With an extensive range of libraries for machine learning, data science, and web development, Python continues to hold the trust of many leading professionals in data extraction and collection. It is popular because it is a very high-level language with an easy flow and a clear coding style.

Python is widely considered one of the best programming languages for web scraping because it handles the whole crawling process smoothly and supports the most common scraping tools, such as Scrapy, Beautiful Soup, and Selenium. It is a high-level language used for web development, mobile application development, and scraping the web. That power cuts both ways: a carelessly written Python script can execute thousands of requests a second, which could cost the website owner a lot of money and possibly bring down their site (see denial-of-service (DoS) attack). Selenium, for its part, also has a library for Python.

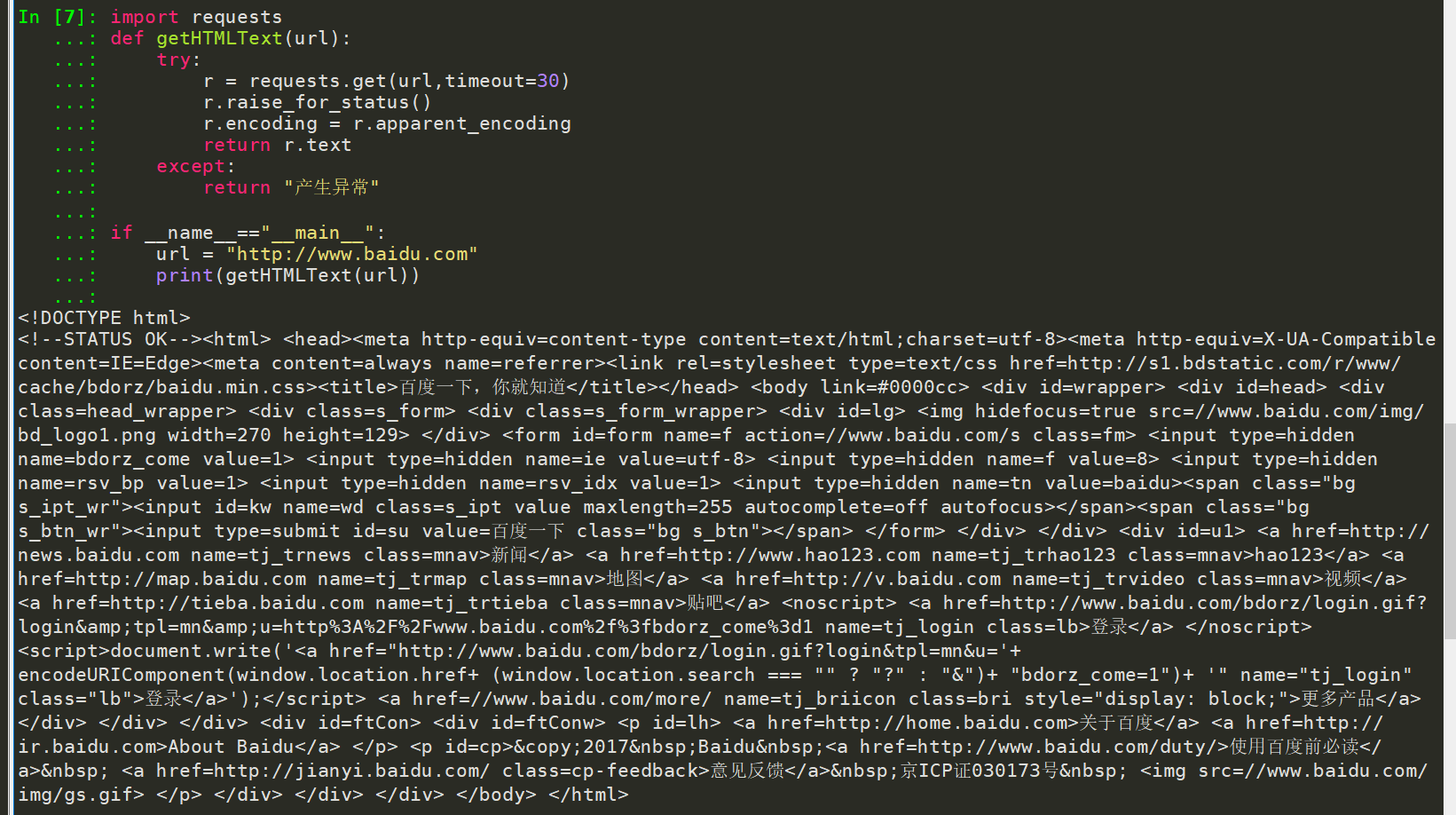

How can scraping a website be automated? How can I set up a scraping project using the Scrapy framework for Python? How do I tell Scrapy which elements to scrape?

Every time we scrape a website, we want to attempt to make only one request per page. We don't want to make a new request every time our parsing or other logic doesn't work out, so we should save the page locally first and parse the saved copy. If I'm just doing some quick tests, I'll usually start out in a Jupyter notebook, because you can request a web page in one cell and have that page available to every cell below it without making a new request. With this in mind, we want to be very careful with how we program scrapers to avoid crashing sites and causing damage.
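The "one request per page" idea can be sketched as a small on-disk cache. This is a minimal illustration, not a definitive implementation: the names `get_page`, `fetch`, and `CACHE_DIR`, the cache layout, and the User-agent string are all assumptions made for the example.

```python
import hashlib
from pathlib import Path
from urllib.request import Request, urlopen

CACHE_DIR = Path("page_cache")  # hypothetical folder for saved pages


def fetch(url):
    """Download a page once; the User-agent string here is a placeholder."""
    request = Request(url, headers={"User-Agent": "example-scraper"})
    with urlopen(request, timeout=30) as response:
        return response.read()


def get_page(url, fetcher=fetch, cache_dir=CACHE_DIR):
    """Return the page body, requesting it only if no local copy exists."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    # Hash the URL so any address maps to a safe, fixed-length file name.
    name = hashlib.sha256(url.encode("utf-8")).hexdigest() + ".html"
    path = cache_dir / name
    if path.exists():
        return path.read_bytes()  # reuse the saved copy, no new request
    body = fetcher(url)
    path.write_bytes(body)  # save before any parsing happens
    return body
```

Calling `get_page("https://example.com/pages/")` twice would hit the network only the first time, so parsing code can be rerun as often as needed against the saved file.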

Some robots.txt files have many User-agent entries, each with different rules. Common bots are googlebot, bingbot, and applebot, all of which you can probably guess the purpose and origin of. We don't really need to provide a User-agent when scraping, so User-agent: * is the entry we would follow. A * means that the rules that follow apply to all bots (that's us). The Crawl-delay tells us the number of seconds to wait between requests, so in this example we need to wait 10 seconds before making another request. Allow gives us specific URLs we're allowed to request with bots, and Disallow gives the ones we are not. In this example we're allowed to request anything in the /pages/ subfolder, which means anything that starts with example.com/pages/.
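These rules can also be checked programmatically with the standard library's `urllib.robotparser`. The robots.txt text below is an assumed reconstruction that matches the description above (the 10-second delay and the /pages/ rule come from the text; the `Disallow: /scripts/` line is purely illustrative).

```python
from urllib.robotparser import RobotFileParser

# Assumed robots.txt contents; only Crawl-delay and Allow are described in the text.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Allow: /pages/
Disallow: /scripts/
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())  # parse from text, no network request

# We are not a named bot, so we check against the "*" entry.
can_read_pages = rules.can_fetch("*", "https://example.com/pages/about.html")
can_read_scripts = rules.can_fetch("*", "https://example.com/scripts/run.js")
delay_seconds = rules.crawl_delay("*")  # seconds to wait between requests

print(can_read_pages, can_read_scripts, delay_seconds)
```

Against a live site, the same parser can load the file itself with `set_url(...)` followed by `read()`, and the returned delay can be passed to `time.sleep` between requests.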

The selector #temp matches an element whose id is temp; to get it, use select_one('#temp'). The selector .temp.example matches an element with both classes temp and example; to get it, use select_one('.temp.example').

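The selector .temp .example matches an element with class example nested inside a parent element with class temp; to get it, use select_one('.temp .example'). Note the space between the two class names. The selector .temp a matches an a element nested inside a parent element with class temp; to get it, use select_one('.temp a'). Again, note the space between .temp and a.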
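The selectors discussed here can be tried on a small snippet with Beautiful Soup. The HTML below is made up for illustration, and the sketch assumes the `beautifulsoup4` package is installed; it uses the built-in `html.parser` so no extra parser is needed.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, written only to exercise each selector once.
HTML = """
<div id="temp">by id</div>
<p class="temp example">both classes</p>
<div class="temp">
  <span class="example">nested example</span>
  <a href="/pages/one">nested link</a>
</div>
"""

soup = BeautifulSoup(HTML, "html.parser")

by_id = soup.select_one("#temp")           # element with id="temp"
both = soup.select_one(".temp.example")    # one element carrying both classes
nested = soup.select_one(".temp .example")  # class example inside a .temp parent
link = soup.select_one(".temp a")          # <a> inside a .temp parent

print(by_id.get_text(), both.name, nested.get_text(), link["href"])
```

The only difference between `.temp.example` and `.temp .example` is the space, yet the first selects the `p` element and the second selects the `span` nested in the `div`.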
